Have you ever worked with financial data trapped in Excel files? If you're like me, you've probably experienced the frustration of dealing with scattered spreadsheets, manual updates, and the constant worry about data accuracy. After facing these challenges repeatedly in my professional roles, I decided to build a solution that would address these pain points – and that's how this project was born.

In my experience working with financial data at companies like MediaMarktSaturn and Flix SE, I noticed a common pattern: important financial information was often scattered across multiple Excel files, making it difficult to maintain consistency and track changes. The reporting process was manual, time-consuming, and prone to errors. I thought, "There must be a better way to handle this."

The Problem with Traditional Financial Reporting

Scattered spreadsheets and manual, error-prone reporting are a challenge many finance departments face. They have valuable data but lack the infrastructure to transform it into actionable insights efficiently. I wanted to create a project that would demonstrate how finance teams can transition from these legacy approaches to a more scalable, transparent solution.

My Approach: Building a Modern Data Stack

I decided to build a complete end-to-end financial data pipeline that would simulate how a company could modernize its financial reporting. The goal was to create something practical that showcased both technical skills and business understanding.

Here's how I approached it:

- Data Generation: First, I created a Python script to generate realistic synthetic financial transaction data. This simulated the kind of information you'd typically find in an ERP system – invoices, payments, customer data, etc.

- Database Setup: I used PostgreSQL (running in Docker) to store this raw data, similar to how a company would have a central database for their financial information.

- Data Transformation: This is where it gets interesting! I implemented dbt (data build tool) to transform the raw financial data into clean, analysis-ready models. dbt allowed me to create a series of transformations that mirror how financial data is typically processed – from raw transactions to aggregated financial statements.

- Testing & Documentation: I added data quality tests to ensure the numbers were accurate and documented the entire process, making it easy for others to understand the data lineage.

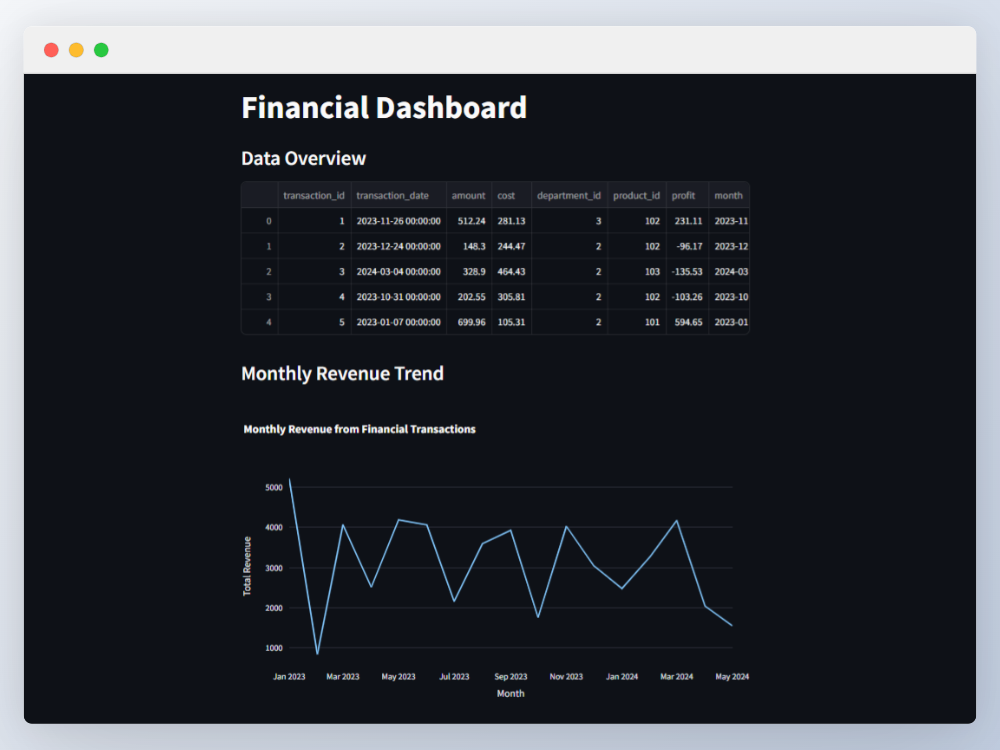

- Visualization: Finally, I built interactive dashboards using Streamlit and Plotly to visualize key financial metrics and KPIs.
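To make the first three steps more concrete, here is a minimal sketch rather than the project's actual code. It generates seeded synthetic invoices, loads them into a raw table, and runs one aggregating query of the kind a dbt model might contain. Several things are assumptions for illustration: the table and column names, the product and region labels, and the use of Python's built-in `sqlite3` as a stand-in for PostgreSQL so the example runs without Docker.

```python
import random
import sqlite3
from datetime import date, timedelta

PRODUCTS = ["Basic", "Pro", "Enterprise"]  # hypothetical product names
REGIONS = ["North", "South", "West"]       # hypothetical regions


def generate_invoices(n, seed=42):
    """Return n synthetic invoice rows as tuples."""
    rng = random.Random(seed)  # seeded so every run produces the same data
    start = date(2024, 1, 1)
    rows = []
    for invoice_id in range(1, n + 1):
        invoice_date = start + timedelta(days=rng.randrange(365))
        amount_cents = rng.randrange(5_000, 500_000)  # stored as integer cents
        rows.append((
            invoice_id,
            invoice_date.isoformat(),
            rng.choice(PRODUCTS),
            rng.choice(REGIONS),
            amount_cents,
        ))
    return rows


def load_raw(conn, rows):
    """Create the raw table and insert the generated rows."""
    conn.execute(
        "CREATE TABLE raw_invoices ("
        "invoice_id INTEGER PRIMARY KEY, invoice_date TEXT, "
        "product TEXT, region TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO raw_invoices VALUES (?, ?, ?, ?, ?)", rows)
    conn.commit()


# Stand-in for a transformation model: raw invoices -> revenue per month.
MONTHLY_REVENUE_SQL = """
SELECT substr(invoice_date, 1, 7) AS month,
       SUM(amount_cents)          AS revenue_cents
FROM raw_invoices
GROUP BY substr(invoice_date, 1, 7)
ORDER BY month
"""


def monthly_revenue(conn):
    return conn.execute(MONTHLY_REVENUE_SQL).fetchall()


conn = sqlite3.connect(":memory:")  # in-memory database, nothing to clean up
invoices = generate_invoices(1000)
load_raw(conn, invoices)
monthly = monthly_revenue(conn)
for month, revenue_cents in monthly:
    print(month, f"{revenue_cents / 100:,.2f}")
```

Storing amounts as integer cents avoids floating-point rounding in the sums, and seeding the generator keeps the synthetic data identical between runs, which makes downstream numbers easier to compare.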

Technical Challenges and Learnings

Building this project wasn't without challenges. Some of the technical hurdles I faced included:

- Generating Realistic Data: Creating synthetic financial data that actually makes sense from a business perspective was harder than I expected. I had to ensure transactions balanced correctly and followed realistic patterns.

- dbt Modeling: Designing the right transformation models required both technical skills and financial knowledge. I needed to understand how raw transactions should flow into financial statements.

- Docker Configuration: Setting up a reproducible environment with Docker took some trial and error, but it was worth it to create a solution others could easily run.
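One way to keep synthetic transactions balanced is to generate them as double-entry pairs, so every debit has a matching credit. The sketch below assumes that approach. The account names and amount ranges are made up for illustration, and this is not necessarily how the project's generator works.

```python
import random
from decimal import Decimal


def make_journal_entries(n_invoices, seed=7):
    """Create two journal lines per invoice: a debit and a matching credit."""
    rng = random.Random(seed)
    entries = []
    for invoice_id in range(1, n_invoices + 1):
        # Decimal keeps cent amounts exact, unlike binary floats.
        amount = Decimal(rng.randrange(1_000, 250_000)) / 100
        entries.append({
            "invoice_id": invoice_id,
            "account": "accounts_receivable",  # hypothetical account name
            "debit": amount,
            "credit": Decimal("0"),
        })
        entries.append({
            "invoice_id": invoice_id,
            "account": "revenue",              # hypothetical account name
            "debit": Decimal("0"),
            "credit": amount,
        })
    return entries


def is_balanced(entries):
    """True when total debits equal total credits across all lines."""
    total_debit = sum((e["debit"] for e in entries), Decimal("0"))
    total_credit = sum((e["credit"] for e in entries), Decimal("0"))
    return total_debit == total_credit
```

Calling `is_balanced(make_journal_entries(50))` returns `True` by construction. The check becomes useful once the generator grows more complicated, for example with partial payments or refunds.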

The most valuable learning was seeing how modern data tools can dramatically improve financial reporting processes. What would take days of manual work in Excel can be automated into a pipeline that refreshes in minutes.

The Results: From Raw Data to Financial Insights

The final result was a complete financial reporting system that takes raw transaction data and transforms it into actionable business insights. The dashboard provides:

- Revenue analysis by product, region, and time period

- Customer profitability metrics

- Cash flow projections

- Expense breakdowns

- Financial KPI tracking
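Most of these views come down to summing one measure over different combinations of dimensions. A small sketch of that idea follows, using only the standard library. The sample rows and column names are invented for illustration and are not project data.

```python
from collections import defaultdict


def revenue_by(rows, *dimensions):
    """Sum amount_cents for each combination of the given dimension columns."""
    totals = defaultdict(int)
    for row in rows:
        key = tuple(row[d] for d in dimensions)
        totals[key] += row["amount_cents"]
    return dict(totals)


# Made-up sample rows, amounts in integer cents.
sample = [
    {"month": "2024-01", "product": "Basic", "region": "North", "amount_cents": 12_000},
    {"month": "2024-01", "product": "Pro", "region": "North", "amount_cents": 30_000},
    {"month": "2024-02", "product": "Basic", "region": "South", "amount_cents": 8_000},
    {"month": "2024-02", "product": "Basic", "region": "North", "amount_cents": 5_000},
]

by_product = revenue_by(sample, "product")
by_region_month = revenue_by(sample, "region", "month")
```

In a dashboard, each chart or filter would call the same aggregation with a different set of dimensions, which keeps the numbers consistent between views.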

What I'm most proud of is how the project demonstrates the entire data lifecycle – from raw data ingestion to final visualization. It shows not just technical skills but also how those skills can be applied to solve real business problems.

Why This Matters for Finance Teams

This project isn't just a technical exercise – it represents a practical path forward for finance departments looking to modernize their reporting processes. The benefits of this approach include:

- Automation: Eliminate manual data processing and reduce human error

- Version Control: Track changes to financial calculations and models

- Data Quality: Implement tests to catch issues before they impact reports

- Transparency: Create clear documentation of how financial metrics are derived

- Scalability: Handle growing data volumes without restructuring the entire process
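To illustrate the data quality point, here is a rough Python sketch of three checks modeled on dbt's built-in generic tests (`not_null`, `unique`, `accepted_values`). In dbt these are declared in YAML and compiled to SQL. The functions below only mimic the idea on in-memory rows, and the column names and allowed statuses are assumptions.

```python
def not_null(rows, column):
    """Return rows where the column is missing or None."""
    return [r for r in rows if r.get(column) is None]


def unique(rows, column):
    """Return rows whose column value already appeared in an earlier row."""
    seen, duplicates = set(), []
    for r in rows:
        value = r.get(column)
        if value in seen:
            duplicates.append(r)
        seen.add(value)
    return duplicates


def accepted_values(rows, column, allowed):
    """Return rows whose column value is outside the allowed set."""
    return [r for r in rows if r.get(column) not in allowed]


def run_checks(rows):
    """Run all checks and return only the ones that found offending rows."""
    results = {
        "invoice_id not_null": not_null(rows, "invoice_id"),
        "invoice_id unique": unique(rows, "invoice_id"),
        "status accepted_values": accepted_values(
            rows, "status", {"open", "paid", "overdue"}  # hypothetical statuses
        ),
    }
    return {name: bad for name, bad in results.items() if bad}


# Deliberately flawed sample: a duplicate id, a missing id, an unknown status.
rows = [
    {"invoice_id": 1, "status": "paid"},
    {"invoice_id": 1, "status": "open"},
    {"invoice_id": None, "status": "refunded"},
]
failed = run_checks(rows)
```

An empty result means every check passed. Returning the offending rows rather than a plain boolean makes it easier to see what went wrong before a report is published.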

In my experience, many finance teams are interested in these improvements but aren't sure where to start. This project provides a concrete example they can learn from.

Next Steps and Future Improvements

While I'm happy with what I've built so far, there are several enhancements I'm considering for the future:

- Adding more advanced financial metrics and forecasting models

- Implementing CI/CD pipelines for the dbt models

- Creating a more sophisticated data quality monitoring system

- Expanding the dashboard with more interactive features

I'd love to hear from others who have worked on similar projects or from finance professionals who are dealing with these challenges. Feel free to check out the GitHub repository and share your thoughts!

Key Technologies Used:

Python | Pandas | SQLAlchemy | PostgreSQL | Docker | dbt | Streamlit | Plotly

Build With Me: Interactive Data Visualization

Want to try creating a simple data visualization? Here's a basic bar chart example using HTML and CSS. Feel free to modify the values and see how the chart changes!

[Interactive bar chart: Monthly Revenue (2024), with controls to adjust values and customize the chart]
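If you want to reproduce a chart like this yourself, one possible approach is to generate the HTML and CSS from a small Python function. This is a sketch under assumptions, not the code behind the original widget: the function name, class names, colors, and revenue figures are all invented for illustration.

```python
from html import escape


def render_bar_chart(title, values, max_height_px=200):
    """Return an HTML snippet with one CSS-styled bar per label."""
    peak = max(values.values(), default=0)
    bars = []
    for label, value in values.items():
        # Scale each bar relative to the largest value.
        height = round(value / peak * max_height_px) if peak else 0
        bars.append(
            f'<div class="bar" style="height:{height}px" '
            f'title="{escape(str(value))}"><span>{escape(label)}</span></div>'
        )
    css = (
        ".chart{display:flex;align-items:flex-end;gap:8px}"
        ".bar{flex:1;background:#4a90d9;text-align:center}"
    )
    return (
        f"<style>{css}</style>"
        f"<h3>{escape(title)}</h3>"
        f'<div class="chart">{"".join(bars)}</div>'
    )


# Made-up revenue figures; change them and re-render to see the bars move.
chart_html = render_bar_chart(
    "Monthly Revenue (2024)",
    {"Jan": 42_000, "Feb": 21_000, "Mar": 31_500},
)
with open("chart.html", "w", encoding="utf-8") as f:
    f.write(chart_html)
```

Opening `chart.html` in a browser shows the bars. Editing the dictionary and running the script again is the static equivalent of adjusting the values in an interactive widget.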

Note: This interactive example demonstrates how data visualization can make financial information more engaging and easier to understand. In this project, I used similar techniques to transform raw financial data into actionable insights.